AI is reshaping nearly every sector. AI risk management is a systematic approach to identifying, assessing, and mitigating the risks of AI systems throughout their lifecycle. Companies are developing approaches that embed a data-driven culture across the organization to reduce complexity. Still, as companies continue to rely on AI to drive innovation and competitive advantage, it is critical not to lose sight of the inherent risks that must be balanced so that these technologies deliver value safely and responsibly.

The increasing use of AI technologies raises unique challenges that go beyond those tied to conventional IT infrastructures. AI models can behave in unexpected ways, amplify existing biases in the data they are trained on, raise complex privacy issues, and act as something of a black box when it comes to understanding their decision-making processes. AI risk management encompasses systematic approaches to risk identification, prevention, and mitigation that ensure organizations can use the power of AI without falling victim to its threats.

Strong risk management positions organizations to effectively manage the complexities of AI deployment while upholding trust, regulatory compliance, and ethical standards in a rapidly evolving AI world.

AI risk management encompasses the structured processes and methodologies organizations implement to identify, evaluate, and address risks specifically associated with artificial intelligence systems. It extends beyond traditional risk management approaches by addressing the unique challenges posed by AI technologies, including algorithmic bias, lack of explainability, data privacy concerns, and potential autonomous behavior that may deviate from intended purposes. This discipline integrates technical expertise with governance frameworks to ensure AI deployments align with organizational objectives while minimizing potential harms.

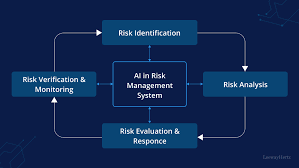

At its core, AI risk management involves continuous assessment throughout an AI system’s lifecycle, from initial design and development through deployment and ongoing operation. This includes evaluating training data for potential biases, scrutinizing algorithmic decision-making processes, testing systems for robustness against adversarial attacks, and monitoring for performance drift over time. The goal is to create a balanced approach that enables innovation while establishing appropriate guardrails to prevent unintended consequences.

The scope of AI risk management extends beyond technical considerations to encompass ethical, legal, and regulatory dimensions. Organizations must consider how AI systems impact stakeholders, including customers, employees, and society at large. This requires cross-functional collaboration among data scientists, legal experts, ethicists, business leaders, and risk professionals to develop comprehensive strategies that address both technical vulnerabilities and broader societal implications.

As AI systems become further integrated into critical infrastructure and business processes, this type of proactive management is not only helpful but necessary.

AI systems can fail in ways traditional software does not. Without risk management, AI can produce harmful outcomes that were not anticipated during development. When applied in high-stakes areas such as healthcare diagnostic tools, autonomous vehicles, and financial services, these failures can have serious implications for human safety, financial stability, and the reputation of organizations.

The ethical implications of complex AI systems become more pronounced as those systems grow in power. AI risk management frameworks provide a structured approach to assessing whether systems align with appropriate ethical principles and organizational values. That includes making sure that AI applications respect human autonomy, promote fairness, and operate transparently.

AI systems are trained on historical data, which is often skewed by societal biases. Without proper management, these systems can reinforce, or even magnify, discrimination against protected groups. Comprehensive risk management processes help organizations identify potential sources of bias at every stage of the AI lifecycle, from data collection and model development to deployment and monitoring.

AI systems have multidimensional risk profiles that differ from those of traditional technology. Organizations need to understand these distinct risk categories in order to develop effective mitigation plans.

AI systems are subject to unpredictable performance issues, such as model drift, where accuracy degrades over time as real-world conditions shift away from the data the model was trained on. Robustness challenges, where small changes to inputs lead to radically different outputs, and scalability challenges, where a model behaves differently in production than it did in a controlled testing environment, are also technical risks.
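One common way to watch for the kind of drift described above is to compare the distribution of a feature in production against its distribution at training time. The following is a minimal sketch using the Population Stability Index (PSI); the function name, the bin count, and the small `eps` floor are assumptions made for illustration, not part of any particular framework.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """PSI between a reference sample and a live sample of one numeric feature.

    Bin edges are derived from the reference sample (an assumption of this
    sketch); live values outside that range are clamped into the edge bins.
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")

    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for a constant feature

    def bucket_shares(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        eps = 1e-6  # floor so empty buckets do not produce log(0)
        return [max(c / len(values), eps) for c in counts]

    e = bucket_shares(expected)
    a = bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Illustrative data only: a reference sample and a shifted "production" sample.
reference = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]
print(population_stability_index(reference, reference))
print(population_stability_index(reference, shifted))
```

A frequently cited rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, but those cut-offs are conventions rather than guarantees, and the right alert threshold depends on the feature and the use case.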

AI systems may inadvertently embed or reinforce social biases present in the data they were trained on, producing discriminatory outcomes that affect vulnerable groups. Such biases can appear in hiring algorithms that favor particular demographic groups, facial recognition technology whose accuracy differs across ethnic groups, or lending decisions that perpetuate existing patterns of economic exclusion.
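To make the hiring example concrete, one simple fairness check compares how often each group receives a favorable outcome. The sketch below computes per-group selection rates and the ratio of the lowest rate to the highest, often called the disparate impact ratio. The function names and the sample records are hypothetical, and a single ratio is only one of many possible fairness metrics.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of favorable outcomes per group from (group, selected) pairs."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        selected[group] += int(outcome)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(records: Iterable[Tuple[str, bool]]) -> float:
    """Lowest group selection rate divided by the highest.

    1.0 means every group is selected at the same rate. If nobody is
    selected in any group, this sketch returns 1.0 by convention.
    """
    rates = selection_rates(list(records))
    highest = max(rates.values())
    if highest == 0:
        return 1.0
    return min(rates.values()) / highest


# Hypothetical screening outcomes: group label and whether the model selected.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 4 + [("B", False)] * 6
)
print(selection_rates(decisions))
print(disparate_impact_ratio(decisions))
```

A ratio below 0.8 is often used as a warning sign, following the "four-fifths" rule of thumb from US employment guidance. It is a screening heuristic, so a low ratio calls for investigation rather than proving discrimination on its own.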

AI solutions have unique security vulnerabilities, including adversarial attacks in which slight, deliberate perturbations of input data cause catastrophic errors or misleading outputs. Privacy is an equally serious concern, because many AI systems need large amounts of personal data for training or operation, creating opportunities for that information to fall into the wrong hands.

The landscape of AI regulation is evolving rapidly around the world and is characterized by emerging frameworks that call for varying levels of transparency and other safeguards in algorithmic systems. Organizations that deploy AI face potential liability for algorithmic decisions that cause harm, violate discrimination laws, or fail to meet emerging norms for AI governance.

Integrating AI systems introduces significant operational risks, such as reliance on scarce technical resources, the need to build complicated infrastructure, and disruption to business processes. Costs can be high and returns uncertain, especially when organizations overestimate the capabilities of AI or underestimate the challenges of implementation.

To detect AI risks accurately, organizations need a holistic approach that starts at the earliest stages of system development.

Organizations must establish structured risk assessment frameworks tailored for AI systems that merge conventional risk management practices with specialized techniques aimed at tackling AI-specific challenges. This usually means cross-functional teams of individuals with varied expertise performing systematic assessments across the full AI lifecycle, from concept and data selection through development, testing, deployment, and operations.

These assessments should evaluate both the technical elements of the system, including algorithm choice, data quality, and model performance, and broader contextual elements such as use-case scenarios, relevant stakeholders, and deployment contexts.

Many of the risk assessment methods used for AI systems rely on scenario planning and red-teaming exercises to identify failure modes and edge cases that standard testing would not catch. These techniques deliberately stress-test systems by introducing adversarial inputs, unexpected user actions, and shifting environmental conditions to find vulnerabilities.
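As a rough illustration of stress-testing, the sketch below measures how often a model's prediction changes when small random noise is added to its inputs. This is a simple perturbation check, not a true adversarial attack, which would search for worst-case perturbations rather than sample them at random. The `flip_rate` function, its parameters, and the toy threshold model are all hypothetical.

```python
import random
from typing import Callable, Sequence


def flip_rate(
    predict: Callable[[Sequence[float]], int],
    inputs: Sequence[Sequence[float]],
    noise: float = 0.05,
    trials: int = 20,
    seed: int = 0,
) -> float:
    """Fraction of noisy copies of each input whose prediction differs
    from the prediction on the clean input."""
    rng = random.Random(seed)  # seeded so repeated runs are comparable
    flips = 0
    total = 0
    for x in inputs:
        baseline = predict(x)
        for _ in range(trials):
            perturbed = [v + rng.uniform(-noise, noise) for v in x]
            flips += int(predict(perturbed) != baseline)
            total += 1
    return flips / total if total else 0.0


def toy_model(x: Sequence[float]) -> int:
    """Stand-in classifier for the example: positive if features sum above 0."""
    return int(sum(x) > 0)


# Inputs far from the decision boundary should be stable under small noise;
# inputs close to it are expected to flip some of the time.
print(flip_rate(toy_model, [[10.0, 10.0]]))
print(flip_rate(toy_model, [[0.001, 0.0]]))
```

A high flip rate on realistic inputs suggests the model is fragile near those points and deserves closer review, for example with dedicated adversarial testing tools.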

To evaluate risks across different dimensions, organizations must implement both quantitative and qualitative metrics covering aspects such as reliability, bias and fairness, explainability, security, and privacy. This measurement framework supports consistent risk assessment and prioritization based on both the likelihood of adverse events and their severity.
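Prioritizing by likelihood and severity can be sketched as a small risk register. The example below assumes a 1 to 5 scale for both dimensions and multiplies them into a single score; the scale, the `Risk` class, and the sample entries are illustrative assumptions, and many organizations use a risk matrix or weighted scoring instead.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    severity: int    # assumed scale: 1 (minor) to 5 (critical)

    def __post_init__(self) -> None:
        for value in (self.likelihood, self.severity):
            if not 1 <= value <= 5:
                raise ValueError("likelihood and severity must be between 1 and 5")

    @property
    def score(self) -> int:
        """Simple likelihood x severity score, from 1 to 25."""
        return self.likelihood * self.severity


def prioritize(risks: Iterable[Risk]) -> List[Risk]:
    """Highest score first; ties are broken in favor of higher severity."""
    return sorted(risks, key=lambda r: (r.score, r.severity), reverse=True)


# Hypothetical register entries with made-up ratings.
register = [
    Risk("model drift", likelihood=4, severity=3),
    Risk("privacy breach", likelihood=2, severity=5),
    Risk("biased outcomes", likelihood=3, severity=5),
]
for risk in prioritize(register):
    print(f"{risk.score:>2}  {risk.name}")
```

Breaking ties on severity reflects one possible design choice: when two risks score the same, the one with the worse potential impact is reviewed first.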