As artificial intelligence increasingly shapes decisions in finance, healthcare, hiring, and other domains, fairness in machine learning has become a pressing concern. AI promises efficiency and objectivity, but models often inherit and amplify the human biases present in their training data. This is where bias mitigation filters come into play: they serve as a first line of defense against unfair AI outcomes.

What Are Bias Mitigation Filters?

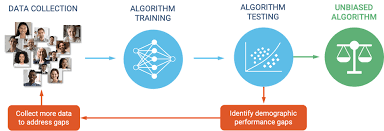

Bias mitigation filters are AI preprocessing tools designed to reduce or eliminate bias before machine learning models even begin training. These filters operate on raw data, identifying imbalances and patterns that could lead to discriminatory results. By adjusting or transforming the data beforehand, these tools aim to create a more level playing field for all individuals represented in the dataset.

For example, if historical loan approval data consistently favors one demographic group over another, a model trained on that data is likely to do the same. A bias mitigation filter can help correct this by reweighting samples, modifying labels, or resampling the data, while preserving as much of the original information as possible.

Why Bias Exists in AI

AI models learn from data. If that data reflects historical discrimination or social inequalities, the model may perpetuate or even amplify those biases. Even when sensitive attributes like race, gender, or age are not explicitly included in the dataset, proxies (such as ZIP code or education level) can still carry those signals, so simply dropping the sensitive columns does not remove the bias. Without bias mitigation, the resulting models risk producing unfair outcomes, even when fairness is a stated objective.
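One rough way to check whether a feature acts as a proxy is to ask how much better the sensitive attribute can be guessed once that feature is known. The sketch below is illustrative only: the function name `proxy_strength` and the toy ZIP code data are hypothetical, and a real audit would use a held-out set and a proper statistical measure of association.

```python
from collections import Counter, defaultdict

def proxy_strength(feature_values, sensitive_values):
    """Return (baseline, proxy_accuracy) for guessing the sensitive attribute.

    baseline: accuracy of always guessing the most common sensitive value.
    proxy_accuracy: accuracy of guessing the most common sensitive value
    within each feature value. A large gap suggests the feature is a proxy.
    """
    if len(feature_values) != len(sensitive_values) or not feature_values:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(sensitive_values)
    baseline = Counter(sensitive_values).most_common(1)[0][1] / n

    # Count sensitive values separately for each feature value.
    by_feature = defaultdict(Counter)
    for f, s in zip(feature_values, sensitive_values):
        by_feature[f][s] += 1

    # Within each feature value, the best guess is the majority group.
    correct = sum(c.most_common(1)[0][1] for c in by_feature.values())
    return baseline, correct / n

# Hypothetical toy data: the group label is never used as a model feature,
# yet ZIP code alone recovers it for most rows.
zip_codes = ["10001", "10001", "10001", "20002", "20002", "20002", "30003", "30003"]
groups = ["A", "A", "A", "B", "B", "B", "A", "B"]

baseline, with_proxy = proxy_strength(zip_codes, groups)
print(f"baseline={baseline:.3f}, with ZIP code={with_proxy:.3f}")
```

In this toy data, knowing the ZIP code raises the guessing accuracy well above the baseline, which is the kind of gap that would flag a feature for closer review.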

Types of Bias Mitigation in Preprocessing

There are several methods used in the preprocessing phase to enhance fairness in machine learning:

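Reweighing: Assigns a weight to each training instance so that, in the weighted data, group membership and the outcome label are statistically independent. Combinations that are underrepresented (for example, positive outcomes in a disadvantaged group) receive higher weights.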

Sampling Techniques: Undersampling overrepresented groups or oversampling underrepresented ones to balance the dataset.

Feature Modification: Alters or removes features that may introduce bias, including features that act as proxies for sensitive attributes.

Fair Representation Learning: Transforms the dataset into a new representation that obfuscates sensitive information while retaining predictive power.

These AI preprocessing tools are not a silver bullet but are essential steps in a larger fairness pipeline.
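As an illustration of the reweighing idea listed above, here is a minimal sketch in plain Python. It assumes the common formulation in which each sample's weight is P(group) × P(label) / P(group, label); the function names and the toy loan data are hypothetical and not taken from any particular library.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Per-sample weights w = P(group) * P(label) / P(group, label)."""
    if len(groups) != len(labels) or not groups:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels (label == 1) within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(
        w for g, y, w in zip(groups, labels, weights) if g == group and y == 1
    )
    return positive / total

# Hypothetical toy data: group A was approved (1) far more often than group B.
groups = ["A"] * 6 + ["B"] * 4
labels = [1, 1, 1, 1, 0, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
rate_a = weighted_positive_rate(groups, labels, weights, "A")
rate_b = weighted_positive_rate(groups, labels, weights, "B")
print(f"weighted approval rate: A={rate_a:.3f}, B={rate_b:.3f}")
```

Before weighting, the approval rates in this toy data are 4/6 for group A and 1/4 for group B; after weighting, both groups match the overall rate. The weights would then be passed to a training routine that accepts per-sample weights, which many libraries support.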

The Role of Bias Mitigation Filters in Fair AI

Bias mitigation filters are crucial because they operate before any modeling begins. By cleansing the data at the source, they help ensure that fairness is built into the model from the ground up. They are particularly important when:

Training datasets are derived from historically biased processes.

Regulations require demonstrable fairness and transparency (such as in credit scoring or employment).

The impact of biased predictions could be harmful or discriminatory.

While bias mitigation can also happen during or after model training (through in-processing and post-processing techniques), preprocessing filters are often the most accessible and broadly applicable approach.

Challenges and Considerations

Implementing bias mitigation filters comes with its own set of challenges:

Trade-offs: Enhancing fairness may come at the cost of accuracy or other performance metrics.

Complexity: Identifying bias is not always straightforward; it requires domain knowledge and careful analysis.

Ethical Decisions: Choices around which metrics to prioritize (e.g., demographic parity vs. equal opportunity) can be contentious.
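To make the difference between the two metrics mentioned above concrete, the following sketch computes both on the same toy predictions. It assumes the usual definitions: demographic parity compares predicted-positive rates across groups, while equal opportunity compares true positive rates. The function names and data are hypothetical.

```python
def positive_rate(values):
    """Share of 1s in a list of 0/1 values."""
    return sum(values) / len(values)

def demographic_parity_difference(groups, predictions, group_a, group_b):
    """Difference in predicted-positive rates between two groups."""
    preds_a = [p for g, p in zip(groups, predictions) if g == group_a]
    preds_b = [p for g, p in zip(groups, predictions) if g == group_b]
    return positive_rate(preds_a) - positive_rate(preds_b)

def equal_opportunity_difference(groups, labels, predictions, group_a, group_b):
    """Difference in true positive rates between two groups.

    Assumes each group contains at least one actual positive (label == 1).
    """
    tpr = {}
    for grp in (group_a, group_b):
        preds_for_positives = [
            p for g, y, p in zip(groups, labels, predictions)
            if g == grp and y == 1
        ]
        tpr[grp] = positive_rate(preds_for_positives)
    return tpr[group_a] - tpr[group_b]

# Hypothetical toy data: a predictor that matches the labels exactly.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 0, 0, 1, 0, 0, 0]
predictions = [1, 1, 0, 0, 1, 0, 0, 0]

dp_diff = demographic_parity_difference(groups, predictions, "A", "B")
eo_diff = equal_opportunity_difference(groups, labels, predictions, "A", "B")
print(f"demographic parity difference: {dp_diff:.2f}")
print(f"equal opportunity difference:  {eo_diff:.2f}")
```

Here the predictor satisfies equal opportunity (every qualified individual in both groups is approved) yet fails demographic parity, because the groups have different base rates in the labels. Which of the two gaps matters more is exactly the kind of choice the paragraph above describes as contentious.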

Despite these challenges, the use of preprocessing tools for bias mitigation is a necessary and proactive approach in the quest for ethical AI.

Conclusion

Bias mitigation filters are an essential part of ensuring fairness in machine learning. As the first defense against unfair AI, these tools help detect and correct imbalances in data before they translate into biased predictions. With increasing reliance on AI systems across high-stakes domains, the importance of responsible data preprocessing cannot be overstated.

By embracing these techniques early in the AI lifecycle, developers and organizations take a crucial step toward building systems that are not only intelligent but also just.