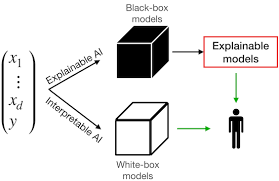

As artificial intelligence continues to shape decisions in healthcare, finance, criminal justice, and beyond, the need for transparency has never been more urgent. Many AI models, particularly deep learning systems, are often criticized as “black boxes”—they produce outputs without offering insights into how those conclusions were reached. In mission-critical or ethically sensitive areas, this opacity can be dangerous. That’s where explainable AI (XAI) enters the conversation, transforming black-box models into more interpretable “glass boxes.”

The Need for Explainable AI

Explainable AI refers to methods and techniques that make the outcomes of AI systems understandable to humans. While performance metrics like accuracy and precision remain essential, they aren’t enough when stakeholders—including data scientists, regulators, and end-users—need to trust the system’s decisions.

For instance, if an AI system denies someone a loan or suggests a particular cancer diagnosis, both the subject and the provider deserve to understand why. This is not just a matter of trust, but also one of accountability and fairness. Transparent AI models help identify bias, ensure compliance with legal standards, and foster public trust.

LIME and SHAP: Popular Tools for Model Interpretability

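Among the most widely used AI transparency tools are LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations). Both attribute an individual prediction to the input features that drove it. LIME is model-agnostic by design, while SHAP offers a model-agnostic estimator alongside faster model-specific ones, for example for tree ensembles. In both cases the explanation is an approximation of the model’s behavior, so its intuitive appeal should not be mistaken for a guarantee of fidelity.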

LIME works by sampling perturbed versions of a single input and observing how the model’s predictions change. It weights those samples by their proximity to the original input and fits a simple surrogate model, often a linear one, that approximates the black-box model in the neighborhood of that specific prediction. The surrogate’s coefficients indicate which features most influenced the decision.
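The perturb, weight, and fit steps can be sketched from scratch in a few lines. This is a minimal illustration of the idea and not the API of the `lime` package: the `black_box` function, the Gaussian perturbation scale, and the kernel width are all assumptions chosen for the example, and the real library adds steps such as interpretable feature representations and feature selection.

```python
import math
import random


def black_box(x):
    # Hypothetical opaque model: nonlinear in x[0], linear in x[1].
    return x[0] ** 2 + 3.0 * x[1]


def solve(A, b):
    # Solve A @ beta = b by Gaussian elimination with partial pivoting.
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    beta = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * beta[j] for j in range(i + 1, n))
        beta[i] = (M[i][n] - s) / M[i][i]
    return beta


def local_surrogate(model, x0, n_samples=500, scale=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear model around x0 (assumed settings)."""
    rng = random.Random(seed)
    d = len(x0)
    A = [[0.0] * (d + 1) for _ in range(d + 1)]  # accumulates X^T W X
    b = [0.0] * (d + 1)                          # accumulates X^T W y
    for _ in range(n_samples):
        # 1. Perturb the input slightly.
        delta = [rng.gauss(0.0, scale) for _ in range(d)]
        z = [xi + di for xi, di in zip(x0, delta)]
        y = model(z)
        # 2. Weight the sample by its proximity to x0.
        dist2 = sum(di * di for di in delta)
        w = math.exp(-dist2 / kernel_width ** 2)
        # 3. Accumulate the weighted least-squares normal equations.
        row = [1.0] + delta  # intercept plus features centered on x0
        for i in range(d + 1):
            b[i] += w * row[i] * y
            for j in range(d + 1):
                A[i][j] += w * row[i] * row[j]
    beta = solve(A, b)
    return beta[0], beta[1:]


intercept, coefs = local_surrogate(black_box, [1.0, 2.0])
print(coefs)  # local slopes; for this toy model they should land near 2 and 3
```

The coefficients describe the model only near the chosen input. Re-running the sketch at a different point, such as `[3.0, 2.0]`, would give a different slope for the first feature, which is exactly what "local" means here.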

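SHAP, on the other hand, is based on cooperative game theory. It assigns each feature a “Shapley value” that quantifies its contribution to the model’s output for a given prediction. Like LIME, these attributions are local, but they come with theoretical guarantees: the values for a prediction sum to the difference between that prediction and a baseline output, and they satisfy a consistency property. Because the values share a common scale, they can also be aggregated across a dataset to give a global view of feature importance.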
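To make the Shapley value concrete, here is a brute-force sketch that enumerates every coalition of features for a tiny model. It is not how the `shap` library computes its values (exact enumeration grows exponentially with the number of features, so practical tools rely on approximations or model-specific algorithms), and the toy `model`, the all-zero `baseline`, and the choice to represent a "missing" feature by its baseline value are assumptions made for illustration.

```python
from itertools import combinations
from math import factorial


def model(x):
    # Hypothetical model: one additive term and one interaction term.
    return 2.0 * x[0] + x[1] * x[2]


def coalition_value(model, x, baseline, present):
    # Features outside the coalition are replaced by their baseline value.
    z = [x[i] if i in present else baseline[i] for i in range(len(x))]
    return model(z)


def shapley_values(model, x, baseline):
    """Exact Shapley values by enumerating all coalitions (small n only)."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when joining coalition s.
                gain = (coalition_value(model, x, baseline, s | {i})
                        - coalition_value(model, x, baseline, s))
                phi[i] += weight * gain
    return phi


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print(phi)  # roughly 2, 3, 3: the interaction x[1] * x[2] is split evenly
print(sum(phi), model(x) - model(baseline))  # the two totals should agree
```

The last line illustrates the additivity property: the attributions sum to the gap between the prediction and the baseline output, so no part of the prediction is left unexplained.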

Both tools have helped make complex models more interpretable, and their growing adoption reflects the rising demand for explainability.

Beyond Tools: A Shift in AI Development

While LIME and SHAP are powerful, explainable AI is more than just implementing specific tools. It requires a shift in how AI systems are designed, developed, and deployed. Developers must prioritize transparency from the outset—choosing interpretable models when possible, documenting design decisions, and engaging with domain experts and affected communities.

Moreover, explainability is not a one-size-fits-all solution. What is considered “understandable” can vary between a data scientist, a doctor, and a layperson. Effective AI transparency tools must adapt explanations to suit different audiences, ensuring they are both informative and accessible.

The Road Ahead

The push from black-box to glass-box AI is not just a technical challenge; it’s a societal imperative. As regulations like the EU’s AI Act and non-binding frameworks like the U.S. Blueprint for an AI Bill of Rights gain traction, organizations that invest in explainable AI will be better positioned to meet both ethical and legal expectations.

In the long run, the goal isn’t merely to decode AI decisions after the fact, but to build systems whose reasoning is inherently clear—an AI that not only predicts, but explains.