As artificial intelligence technologies evolve at breakneck speed, so too does the urgency to regulate them. From biased facial recognition to algorithmic decision-making in finance and healthcare, the global community is grappling with the profound implications of AI for human rights, safety, and equity. In response, a pressing question has emerged: can we establish a global framework for AI accountability that functions like a United Nations of algorithms?

The Fragmented Landscape of Global AI Regulation

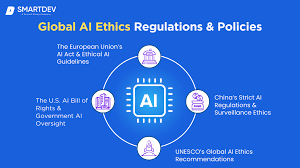

Currently, global AI regulation is anything but unified. The European Union has taken the lead with the AI Act, which sets strict requirements for high-risk applications and demands transparency from systems that affect fundamental rights. Meanwhile, the United States has taken a more decentralized, industry-led approach, relying on existing laws and voluntary guidelines. China, by contrast, emphasizes national security and state control in its approach to AI governance.

This divergence creates a regulatory patchwork that complicates enforcement, especially for multinational corporations whose AI tools are used across borders. Without harmonized global standards, there is a growing risk of regulatory arbitrage, in which companies take advantage of gaps in less regulated jurisdictions to deploy potentially harmful AI systems.

The Rise of AI Audit Frameworks

To address these challenges, countries and organizations are turning to AI audit frameworks: structured methods for assessing the risks, fairness, transparency, and performance of AI systems. These audits can be conducted internally or by third parties, and they typically cover areas such as data governance, bias detection, explainability, and compliance with ethical guidelines.
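To make "bias detection" more concrete, here is a minimal sketch of one check an auditor might run: comparing the rate of favorable outcomes (for example, loan approvals) across demographic groups. The choice of metrics (the demographic parity difference and the ratio of the lowest to the highest selection rate), the 0.8 cutoff borrowed from the US "four-fifths rule" in employment law, and the function names are all illustrative assumptions, not requirements of any framework discussed in this article.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of favorable (1) outcomes for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def audit_parity(predictions, groups, threshold=0.8):
    """Hypothetical audit check comparing selection rates across groups.

    Reports the demographic parity difference (highest rate minus lowest
    rate) and the impact ratio (lowest rate divided by highest rate), and
    flags the model when the ratio falls below `threshold`. The default of
    0.8 mirrors the US "four-fifths rule" and is only an illustrative cutoff.
    """
    if not predictions or len(predictions) != len(groups):
        raise ValueError("need equal-length, non-empty predictions and groups")
    rates = selection_rates(predictions, groups)
    highest, lowest = max(rates.values()), min(rates.values())
    # If no group receives any favorable outcome, treat the rates as equal.
    ratio = lowest / highest if highest > 0 else 1.0
    return {
        "rates": rates,
        "parity_difference": highest - lowest,
        "impact_ratio": ratio,
        "flagged": ratio < threshold,
    }


if __name__ == "__main__":
    # Toy data: 1 = approved, 0 = denied, for applicants in groups "A" and "B".
    preds = [1, 1, 1, 0, 1, 0, 0, 0]
    grps = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print(audit_parity(preds, grps))
```

In this toy data, group A is approved at a rate of 0.75 and group B at 0.25, giving an impact ratio of about 0.33, so the check flags the model. A real audit would examine many metrics, since different fairness definitions can conflict, and would interpret the numbers in light of the system's context and applicable law.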

Notable examples include the voluntary AI Risk Management Framework from the U.S. National Institute of Standards and Technology (NIST), the conformity assessments required for high-risk systems under the EU AI Act, and private-sector tools such as OpenAI's model evaluations and Google's Model Cards. These frameworks aim to operationalize ethical AI principles, but their effectiveness hinges on standardization and enforcement.

Toward AI Accountability Worldwide

Creating AI accountability worldwide means more than agreeing on principles; it requires robust, transparent mechanisms for enforcement and recourse. Achieving this will depend on international cooperation, and the open question is whether such cooperation can realistically take shape in today's fragmented geopolitical climate.

One potential solution is to form a global AI governance body under the auspices of the United Nations or a similar international institution. This body could develop baseline standards for AI audits, facilitate cross-border investigations, and provide guidance on best practices. It could also serve as a forum for dispute resolution when AI systems cause harm across jurisdictions.

However, achieving this level of coordination will require trust, data-sharing agreements, and the political will to prioritize human rights and ethics over commercial or strategic interests.

A United Nations of Algorithms: Aspirational or Achievable?

The vision of a “United Nations of algorithms” is ambitious. Yet the stakes are too high to ignore. As AI systems become more autonomous and integrated into the fabric of global society, accountability must keep pace. Without it, we risk entrenching inequalities, eroding public trust, and losing control over technologies that shape everything from justice systems to climate policy.

Global AI regulation doesn’t need to be monolithic, but it does need to be interoperable. Common auditing standards, data-sharing protocols, and international oversight mechanisms could provide the scaffolding for a safer AI future.

Whether this vision is realized through an international treaty, a coalition of tech companies and governments, or a new institution altogether, one thing is clear: a globally coordinated approach is no longer optional. It is essential.