As artificial intelligence continues to reshape modern life, one of the most controversial applications is AI surveillance. Governments, corporations, and even private individuals now have access to tools capable of monitoring behavior, recognizing faces, tracking movements, and analyzing data on an unprecedented scale. While these technologies promise improved security and efficiency, they also raise profound concerns about privacy ethics and civil liberties. So, where should we draw the line?

The Rise of AI Surveillance

AI surveillance systems use machine learning and data analytics to interpret information from cameras, microphones, and digital communications. In many cities around the world, AI-driven cameras monitor public spaces for unusual activity. In workplaces, algorithms can track employee productivity. In retail, facial recognition is used to identify repeat customers—or potential shoplifters.

Supporters argue that these tools improve safety and convenience. Law enforcement agencies, for example, claim that AI surveillance helps prevent crime and find missing persons. Businesses see it as a way to enhance customer service or reduce losses.

However, this growing capability also introduces serious risks.

Privacy Ethics in the Digital Age

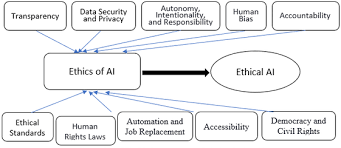

At the core of the debate is the issue of privacy ethics—how to balance the benefits of surveillance with the rights of individuals. Should people be monitored without their knowledge or consent? How long should surveillance data be stored? Who has access to it?

Critics argue that constant monitoring can create a chilling effect, discouraging free expression and legitimate activity. When individuals feel watched, they may begin to self-censor, even if they have nothing to hide. In democratic societies, this threatens the very foundation of personal freedom and autonomy.

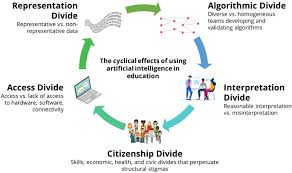

Moreover, marginalized communities are often disproportionately targeted by surveillance systems, raising concerns about bias and discrimination embedded in AI algorithms.

The Dangers of Facial Recognition

One of the most controversial aspects of AI surveillance is facial recognition technology. Although the technology can identify individuals in a crowd with impressive accuracy under ideal conditions, it is far less reliable in real-world use, especially for people of color, women, and non-binary individuals. These facial recognition risks have already led to wrongful arrests and cases of mistaken identity.

Even beyond accuracy concerns, facial recognition poses deep ethical questions. Unlike other forms of identification, your face is inescapable: you can't change it, you can't leave it at home, and you can't opt out when you walk down a street where cameras are installed.

When facial recognition is used without clear regulation or oversight, it becomes a powerful tool for authoritarian control, mass surveillance, and social manipulation.

Drawing the Line: Principles for Ethical Use

So, where should we draw the line?

- Transparency – Organizations using AI surveillance must be open about what they’re collecting and why. Individuals should know when they’re being watched.

- Consent – Where possible, people should have the option to opt in or out of surveillance. In public spaces, this may involve clear signage or restricted data use.

- Accountability – There must be clear rules on who has access to surveillance data and how it is used. Misuse should carry legal consequences.

- Bias Auditing – AI systems, particularly those using facial recognition, must be tested regularly for bias and corrected accordingly.

- Limits on Use – Not every application of surveillance is ethically justified. Some settings, such as protests, religious gatherings, or private homes, should be off-limits.

Conclusion

AI surveillance is here to stay, but that doesn’t mean it should be used without limits. As the technology advances, the need for robust ethical frameworks becomes more urgent. By addressing privacy ethics head-on and acknowledging the facial recognition risks, we can begin to shape policies that protect both innovation and individual freedoms. Drawing the line isn’t just a technical decision—it’s a moral one, and the time to decide is now.