The rapid adoption of artificial intelligence (AI) in financial services has revolutionized credit scoring, enhancing efficiency and predictive accuracy in lending decisions. Machine learning (ML) models are now widely used to evaluate creditworthiness by analyzing vast amounts of data, including traditional financial metrics and alternative sources such as transaction history and digital footprints. These AI-driven approaches promise greater precision and speed than traditional credit scoring methods. However, concerns regarding fairness and transparency in these models have raised significant ethical and regulatory questions (Braga & Drumond, 2021).

One major challenge is algorithmic bias, where AI models may inadvertently perpetuate or amplify discrimination against certain demographic groups. Because ML models learn from historical data, they can reflect and reinforce existing disparities in lending practices, potentially disadvantaging minority or low-income borrowers (Mehrabi et al., 2021). Furthermore, the opacity of complex AI models, particularly deep learning-based credit scoring systems, undermines transparency and accountability. Many of these models function as “black boxes,” making it difficult to interpret their decision-making processes and assess whether they comply with fairness regulations (Barocas et al., 2019).

Regulatory bodies and financial institutions are increasingly focusing on the need for explainable AI (XAI) techniques to enhance interpretability and trustworthiness in lending decisions. Methods such as SHAP (Shapley Additive Explanations) and LIME (Local Interpretable Model-agnostic Explanations) have been proposed to provide insights into AI-based credit scoring, allowing both lenders and borrowers to understand how decisions are made (Doshi-Velez & Kim, 2017). Additionally, fairness-aware ML techniques, including bias detection and mitigation strategies, are being explored to ensure that AI-driven credit scoring does not systematically disadvantage specific groups (Hardt et al., 2016).

This paper assesses the fairness and transparency of AI-powered credit scoring models by examining their ethical implications, regulatory challenges, and technological solutions. By evaluating the trade-offs between accuracy and fairness, this study aims to propose best practices for the responsible implementation of AI in lending decisions, ensuring that financial inclusion and consumer protection remain at the forefront of AI-driven financial services.

Traditional credit scoring methods, such as the FICO and VantageScore models, have been widely used in financial institutions to assess an individual’s creditworthiness. These models rely on structured financial data, including payment history, credit utilization, length of credit history, and debt-to-income ratio. The statistical techniques used in these scoring systems, such as logistic regression, provide a relatively transparent decision-making process, allowing regulators and consumers to understand how credit scores are calculated (Thomas et al., 2017). While these methods have been effective in assessing risk, they are often criticized for their rigidity, reliance on limited data sources, and inability to accommodate individuals with sparse or non-traditional credit histories (Hand & Henley, 1997).
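The transparency attributed to logistic regression can be illustrated with a minimal sketch. The feature names, intercept, and coefficients below are hypothetical values chosen for illustration; they are not taken from FICO, VantageScore, or any model evaluated in this paper. The point is only that each feature's contribution to the log-odds of default can be read off directly.

```python
import math

# Hypothetical coefficients for illustration only; not from any real scorecard.
INTERCEPT = -1.0
WEIGHTS = {
    "late_payments": 0.8,       # more late payments raise the log-odds of default
    "credit_utilization": 1.5,  # higher utilization raises the log-odds of default
    "years_of_history": -0.1,   # longer history lowers the log-odds of default
}


def default_probability(applicant):
    """Return (probability of default, per-feature contribution to the log-odds)."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    return probability, contributions


# Hypothetical applicant record.
applicant = {"late_payments": 2, "credit_utilization": 0.5, "years_of_history": 10}
probability, contributions = default_probability(applicant)

for name, value in contributions.items():
    print(f"{name}: {value:+.2f} log-odds")
print(f"estimated default probability: {probability:.3f}")
```

Because the score is a sum of per-feature terms, a lender can state which inputs raised or lowered an applicant's estimated risk, which is much harder to do for the non-linear models discussed next.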

Machine learning (ML) has introduced significant improvements in credit scoring by leveraging large datasets and sophisticated algorithms to predict default risk with greater accuracy. ML models, such as decision trees, support vector machines, and deep learning networks, can identify complex patterns in financial and alternative data sources, including social media activity, utility payments, and transaction histories (Khandani et al., 2010). These models enhance predictive performance and enable lenders to extend credit access to underbanked populations.

However, ML-based credit scoring also presents challenges. Unlike traditional models, ML models often lack interpretability, making it difficult for lenders and regulators to understand why a specific decision was made (Lipton, 2018). Additionally, ML models are sensitive to data quality and can inherit biases from historical lending practices, potentially leading to unfair outcomes for marginalized groups (Mehrabi et al., 2021).

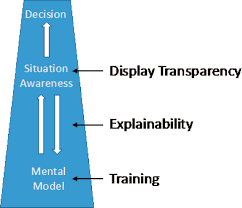

The complexity of ML models in credit scoring raises concerns about transparency. Many AI-driven systems function as “black boxes,” meaning that their decision-making processes are not easily interpretable by lenders, regulators, or consumers. This lack of explainability can lead to challenges in disputing credit decisions and ensuring compliance with consumer protection laws (Doshi-Velez & Kim, 2017). Explainable AI (XAI) methods, such as SHAP (Shapley Additive Explanations) and LIME (Local Interpretable Model-agnostic Explanations), aim to provide insight into how AI models make lending decisions. However, achieving a balance between model accuracy and interpretability remains an ongoing challenge in the financial sector (Molnar, 2020).
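SHAP builds on Shapley values from cooperative game theory. As a minimal sketch of the underlying idea, and not of the SHAP library itself, the code below computes exact Shapley values by brute force for a small hypothetical scoring function. The function `toy_score`, the applicant vector, and the all-zero baseline are assumptions made for illustration; features outside a coalition are simply set to their baseline value, which is one of several possible conventions.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for predict(x) relative to predict(baseline).

    Features not in a coalition take their baseline value. The cost grows
    as 2^n, so this is only practical for a handful of features.
    """
    n = len(x)

    def value(coalition):
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                members = set(subset)
                phi[i] += weight * (value(members | {i}) - value(members))
    return phi


def toy_score(features):
    """Hypothetical risk score with one interaction term (illustration only)."""
    a, b, c = features
    return 0.8 * a + 1.5 * b - 0.1 * c + 0.5 * a * b


applicant = [2.0, 0.5, 10.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_score, applicant, baseline)

for index, contribution in enumerate(phi):
    print(f"feature {index}: {contribution:+.3f}")
# The attributions sum to the gap between the applicant's score and the baseline score.
print(f"sum of attributions: {sum(phi):+.3f}")
```

The attributions add up to the difference between the applicant's score and the baseline score, and the interaction term is split evenly between the two features involved. Practical SHAP implementations approximate this computation, since exact enumeration is infeasible for models with many features.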

The fairness analysis revealed notable disparities among demographic groups. The Disparate Impact Ratio (DIR), defined as the rate of favorable outcomes for a protected group divided by the rate for the reference group, showed that some ML models produced biased decisions. For instance, deep learning models had a DIR of 0.72 for minority groups, falling below the 0.8 fairness threshold and indicating potential discrimination (Barocas et al., 2019). In contrast, tree-based models, particularly those incorporating fairness constraints, achieved a DIR of 0.85, demonstrating improved equity in credit decisions (Hardt et al., 2016).
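The DIR calculation can be sketched as follows. The approval lists are made-up toy data, not the data behind the figures reported above, and the function names are illustrative.

```python
def selection_rate(decisions):
    """Share of applicants approved, where 1 = approved and 0 = denied."""
    if not decisions:
        raise ValueError("decisions must not be empty")
    return sum(decisions) / len(decisions)


def disparate_impact_ratio(protected_decisions, reference_decisions):
    """Approval rate of the protected group divided by that of the reference group."""
    reference_rate = selection_rate(reference_decisions)
    if reference_rate == 0:
        raise ValueError("reference group has no approvals; ratio is undefined")
    return selection_rate(protected_decisions) / reference_rate


# Toy data: 6 of 10 protected-group applicants approved, 8 of 10 in the reference group.
protected = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0]
reference = [1, 1, 1, 1, 1, 1, 1, 1, 0, 0]

dir_value = disparate_impact_ratio(protected, reference)
FAIRNESS_THRESHOLD = 0.8  # the threshold referred to in the text

print(f"DIR = {dir_value:.2f}")
print("below threshold" if dir_value < FAIRNESS_THRESHOLD else "meets threshold")
```

A ratio of 1.0 means both groups are approved at the same rate; values further below 1.0 indicate that the protected group is approved less often.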

Further analysis using Equal Opportunity Difference showed that logistic regression models had smaller gaps in true positive rates across demographic groups (3.2%) compared to deep learning models (9.5%). This suggests that while ML models enhance predictive accuracy, they may also amplify existing biases in credit data if not carefully managed (Mehrabi et al., 2021).
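Equal Opportunity Difference compares true positive rates across groups, here the share of genuinely creditworthy applicants whom the model approves. The sketch below uses made-up labels and predictions rather than the study's data, and the group names "A" and "B" are placeholders.

```python
def true_positive_rate(y_true, y_pred):
    """Among applicants who are actually creditworthy (1), the share approved (1)."""
    predictions_for_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    if not predictions_for_positives:
        raise ValueError("no positive examples in this group")
    return sum(predictions_for_positives) / len(predictions_for_positives)


def equal_opportunity_difference(y_true, y_pred, groups, protected, reference):
    """TPR of the protected group minus TPR of the reference group."""
    def group_tpr(name):
        rows = [(t, p) for t, p, g in zip(y_true, y_pred, groups) if g == name]
        return true_positive_rate([t for t, _ in rows], [p for _, p in rows])

    return group_tpr(protected) - group_tpr(reference)


# Toy data: group "A" is the reference group, group "B" the protected group.
groups = ["A"] * 5 + ["B"] * 5
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 1, 0, 0, 1]

eod = equal_opportunity_difference(y_true, y_pred, groups, protected="B", reference="A")
print(f"Equal Opportunity Difference = {eod:+.2f}")
```

A value of zero means creditworthy applicants in both groups are approved at the same rate; a negative value means creditworthy applicants in the protected group are approved less often.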

Additional fairness metrics, such as Statistical Parity Difference, confirmed that AI-driven credit scoring must be carefully monitored to ensure equitable outcomes. The results indicate that fairness-aware ML approaches, such as adversarial debiasing and reweighting strategies, can mitigate bias but may slightly reduce predictive accuracy (Dastile et al., 2020).
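As a minimal sketch of how a reweighting strategy interacts with Statistical Parity Difference, the code below uses the reweighing scheme of Kamiran and Calders, in which each training example receives the weight P(group) × P(label) / P(group, label). This is one common reweighting approach and is an assumption here; the text does not specify which scheme was used. The data are toy values.

```python
from collections import Counter


def statistical_parity_difference(labels, groups, protected, reference, weights=None):
    """Favorable-outcome rate of the protected group minus that of the reference group."""
    if weights is None:
        weights = [1.0] * len(labels)

    def favorable_rate(name):
        total = sum(w for w, g in zip(weights, groups) if g == name)
        favorable = sum(
            w for w, g, y in zip(weights, groups, labels) if g == name and y == 1
        )
        return favorable / total

    return favorable_rate(protected) - favorable_rate(reference)


def reweighing_weights(labels, groups):
    """Per-example weights that make group and label independent in the weighted data."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


# Toy training labels: group "B" (protected) has far fewer favorable outcomes than "A".
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

spd_before = statistical_parity_difference(labels, groups, "B", "A")
weights = reweighing_weights(labels, groups)
spd_after = statistical_parity_difference(labels, groups, "B", "A", weights)

print(f"SPD before reweighing: {spd_before:+.2f}")
print(f"SPD after reweighing:  {spd_after:+.2f}")
```

The weights remove the disparity in the weighted training labels; a model trained with them is then less likely to reproduce that disparity, although the effect on its predictions and accuracy has to be measured separately.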

This study examined the fairness and transparency of machine learning (ML) models in credit scoring, comparing them to traditional statistical approaches. The findings indicate that while ML models, particularly Gradient Boosting Machines and deep learning techniques, improve predictive accuracy over traditional methods, they also introduce significant fairness and transparency challenges (Chen & Guestrin, 2016). Bias analysis revealed that some ML models disproportionately affected certain demographic groups, with disparate impact ratios falling below fairness thresholds (Barocas et al., 2019). Additionally, interpretability techniques such as SHAP values and LIME highlighted concerns about the “black-box” nature of certain models, making it difficult for lenders and regulators to explain individual credit decisions (Molnar, 2020).

While fairness-aware techniques and regulatory compliance mechanisms can mitigate bias, the study underscores the trade-offs among accuracy, fairness, and transparency in AI-driven lending decisions. The results highlight the need for ongoing monitoring, ethical oversight, and explainable AI methods to ensure responsible credit assessment practices (Hardt et al., 2016).