As artificial intelligence (AI) continues to evolve, the intersection of AI and human rights has become a critical area of global concern. AI systems are increasingly embedded in decision-making processes, from hiring and healthcare to policing and social services, and this raises profound questions. Can machines respect human dignity? And how do we ensure that AI supports, rather than undermines, the rights and freedoms of individuals?

The Risk to Rights

AI systems that are not designed and deployed responsibly can perpetuate bias, reinforce discrimination, and violate privacy. Consider facial recognition technologies that misidentify people at higher rates depending on their race or gender, or risk-assessment algorithms whose opaque logic influences criminal sentencing. These examples show how AI can unintentionally compromise the rights of the very people it is meant to serve.

The core issue is not that machines are malicious; it is that AI reflects the values, assumptions, and biases of those who create it and of the data it learns from. This makes ethical AI design essential. Developers must focus not only on performance and efficiency but also on fairness, accountability, and transparency.

The Role of Ethical AI Design

Ethical AI design involves integrating human rights principles into every stage of the AI lifecycle—from data collection and algorithm development to deployment and monitoring. This includes:

- Ensuring fairness: identifying and reducing bias in training data and in the outcomes a system produces.
- Protecting privacy: designing systems with strong data protection and meaningful consent mechanisms.
- Promoting transparency: making AI decisions explainable and understandable to the people they affect.
- Upholding accountability: establishing clear responsibility for harms caused by AI systems.
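One way to make "reducing bias in outcomes" concrete is to audit a system's decisions group by group. The sketch below computes each group's selection rate (the share of positive decisions) and the demographic parity difference, the gap between the highest and lowest rate. The function names, the toy hiring data, and the choice of metric are illustrative assumptions; demographic parity is only one of several fairness criteria, and which one is appropriate depends on the context.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (1s) for each group label."""
    if len(decisions) != len(groups):
        raise ValueError("decisions and groups must have the same length")
    positives = defaultdict(int)
    totals = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical hiring decisions: 1 = advanced to interview, 0 = rejected.
decisions = [1, 0, 1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

print(selection_rates(decisions, groups))
print(demographic_parity_difference(decisions, groups))
```

In this toy data, group A is advanced 60% of the time and group B 40%, a gap of about 0.2. A gap near zero does not prove a system is fair, and a large gap is a prompt for investigation rather than proof of discrimination: base rates, data quality, and the legal setting all matter.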

Organizations and governments increasingly recognize the need for responsible technology. Instruments such as the EU’s AI Act, a binding, risk-based regulation, and UNESCO’s Recommendation on the Ethics of Artificial Intelligence, a non-binding standard adopted in 2021, aim to guide the development of AI that respects human dignity and rights.

Can Machines Respect Dignity?

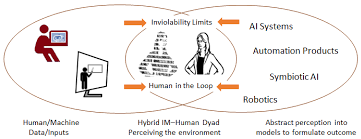

Strictly speaking, machines have no morals or empathy; they cannot “respect” anyone in the human sense. But the systems we build can be designed to operate in ways that uphold human rights. This is not about granting AI systems moral agency, but about shaping them through human values and legal standards.

Ultimately, the question of whether machines can respect dignity is really a question about us: our intentions, our priorities, and our capacity to build technology that serves humanity. The challenge is not just technological but deeply ethical.

A Shared Responsibility

Building AI that aligns with human rights is a shared responsibility. Policymakers must create regulations that enforce rights-based standards. Developers and engineers must commit to ethical design principles. Companies must prioritize human impact over profit. And civil society must hold all stakeholders accountable.

The future of AI does not have to be dystopian. With a commitment to human rights, ethical AI design, and responsible technology, we can ensure that machines serve as tools of empowerment, not oppression.