Artificial intelligence (AI) is transforming finance and accounting at an astonishing rate. Over the past year and a half, The Pulse has chronicled how finance organizations are rapidly leveraging both traditional and generative AI (GenAI) to enhance a wide range of finance applications, from SOX compliance to controllership functions like financial close and reporting.

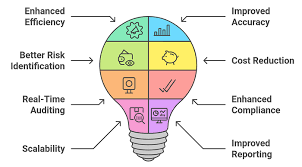

One element connecting all these applications is the financial audit process. When implementing AI for finance and accounting functions, it’s important to consider how it may impact both internal and external audits and to adapt your AI governance and oversight procedures accordingly. We recently explored this topic in our presentation “AI in Accounting and Financial Reporting: How Might it Impact Your Audit?” at the Financial Executives International (FEI) AI conference. Here are some of the takeaways and insights from that session.

The crucial role of AI controls

We’ve all heard about AI’s risks. For finance and accounting functions, these risks typically center on the accuracy of AI-generated outputs and the transparency (organizational awareness) of AI-enabled processes. The global financial system relies on accurate and reliable information from finance and accounting. These teams must have confidence in the data they receive from AI applications and must also demonstrate why that trust is justified, which is no easy feat. However, there are practical steps that organizations can take to maintain trust and accuracy in their financial reporting processes.

Here are some governance procedures and controls that can help organizations support the accuracy and reliability of AI-generated financial data:

- Human oversight and transparency: Maintaining human review in the AI lifecycle where possible and being transparent with stakeholders about where and how AI is being used are two pillars of reliability and trustworthiness.

- Data management and audit trail maintenance: Implementing data quality controls is vital for ensuring data used by AI models is accurate, relevant, and reliable. Archiving both inputs and outputs and managing any changes to the AI models or their data sets can help maintain a thorough audit trail.

- Testing and ongoing monitoring: Implementing robust development, validation, and ongoing monitoring demonstrates to internal and external stakeholders that management is monitoring and addressing risks associated with the use of AI models.

- Documentation and reporting: These procedures enable transparency and traceability, and they provide a clear record of decisions made around AI models.

Let’s dive a little deeper into each of these areas.

Strategic and efficient human oversight

Human review is essential for AI systems, and thoroughly evaluating AI outputs should be standard practice. However, if an AI system consistently proves reliable in initial testing and over time, it may be possible to balance hands-on human oversight with smart, automated monitoring. This can involve setting usage limits and automated checks to flag unusual activity and alert your team when needed. Periodically sampling AI actions can help ensure reliability without overwhelming your team. This approach can maximize AI’s speed and efficiency while maintaining high accuracy and quality.
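As a rough illustration of balancing automated checks with periodic sampling, the sketch below flags every AI action above a limit for review and randomly samples a share of the rest. The function name `select_for_review`, the `amount` field, the 5,000 limit, and the 25% sample rate are all hypothetical choices made for this example, not recommended settings.

```python
import math
import random


def select_for_review(actions, amount_limit, sample_rate, seed=0):
    """Split AI actions into always-reviewed and randomly sampled groups.

    actions: list of dicts with hypothetical "id" and "amount" keys.
    amount_limit: actions above this amount are always flagged.
    sample_rate: share of the remaining actions sampled for human review.
    """
    rng = random.Random(seed)  # fixed seed so the sample can be reproduced
    flagged = [a["id"] for a in actions if a["amount"] > amount_limit]
    routine = [a["id"] for a in actions if a["amount"] <= amount_limit]
    sample_size = min(len(routine), math.ceil(len(routine) * sample_rate))
    sampled = rng.sample(routine, sample_size)
    return flagged, sampled


# Illustrative data only: ten AI-processed items with made-up amounts.
amounts = [120, 80, 9500, 45, 300, 15000, 60, 210, 75, 130]
actions = [{"id": i, "amount": amt} for i, amt in enumerate(amounts)]

flagged, sampled = select_for_review(actions, amount_limit=5000, sample_rate=0.25)
print("Always review:", flagged)
print("Sampled for review:", sorted(sampled))
```

Fixing the random seed is a design choice that lets a reviewer or auditor reproduce which items were sampled; a production process might instead log the sample it drew.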

Data quality practices

The performance and reliability of an AI model hinge on the quality and accuracy of the data you feed into it. Effective AI data management practices are essential to verify data quality and maintain an audit trail for AI transparency. Leading data management practices include implementing data quality controls, archiving model inputs and outputs, and managing changes to AI models and their data sets.

Gauging performance

Robust testing and monitoring are important for finance and accounting functions. Continuous testing and monitoring of AI systems can help ensure AI outputs meet prescribed accuracy, reliability, and compliance standards. Testing metrics and methods vary by use case and model type, but comparing outputs against expected results can help identify inaccuracies. Organizations working with AI vendors should understand and potentially augment the vendor’s testing and monitoring processes.

Case in point

Let’s look at how governance and controls work in practice to achieve accurate AI outputs. In this example, a tech company’s finance team uses a Computer Vision model and a Large Language Model (LLM) to automate its invoice classification process and evaluate process performance afterwards. The AI process includes these steps:

- The Computer Vision model first extracts features and text from the invoices. The LLM then classifies the extracted text into predefined categories (e.g., utilities, office supplies, travel expenses).

- The company evaluates the performance of its invoice classification process by testing both key components: feature extraction and classification.

- The finance team first evaluates the feature extraction component of the invoice classification process on a large testing dataset, calculating metrics like character error rate (CER), word error rate (WER), and Levenshtein distance, which measures the minimum number of single-character edits (insertions, deletions, or substitutions) required to change one string into another.

- The finance team also explores other novel methods for evaluating feature extraction, like semantic similarity score (SemScore), which can help the team evaluate how similar the selected invoice is to other similarly categorized invoices.

- Finally, the company evaluates the performance of the LLM in classifying the invoices correctly, using metrics such as accuracy, precision, recall, and F1 score, which is the harmonic mean of precision and recall.
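The feature extraction metrics named in the steps above can be sketched in a few lines. This is a minimal illustration, assuming CER and WER are computed as the Levenshtein distance between the extracted text and a human-verified reference, divided by the reference length in characters or words. The sample strings are invented, and the function names are ours rather than the case study team’s.

```python
def levenshtein(a, b):
    """Minimum number of insertions, deletions, and substitutions
    needed to turn sequence a into sequence b (strings or lists)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            cost = 0 if x == y else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def cer(reference, extracted):
    """Character error rate: character-level edits per reference character."""
    if not reference:
        raise ValueError("reference text must not be empty")
    return levenshtein(reference, extracted) / len(reference)


def wer(reference, extracted):
    """Word error rate: word-level edits per reference word."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference text must not be empty")
    return levenshtein(ref_words, extracted.split()) / len(ref_words)


# Invented example: the extractor misreads a zero as the letter O.
reference = "Total due 150.00"
extracted = "Total due 15O.00"
print("Levenshtein:", levenshtein(reference, extracted))
print("CER:", round(cer(reference, extracted), 4))
print("WER:", round(wer(reference, extracted), 4))
```

In this example a single misread character gives a low CER but a much higher WER, because the whole word counts as wrong. That difference is one reason a team might track both metrics.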

This case study shows how the team identified and measured the testing methods and metrics needed to comprehensively assess the model’s performance. These test results can demonstrate to risk managers, regulators, auditors, and other stakeholders how management became comfortable with the model’s performance for this particular use case and what error rate it considered acceptable.
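To make the classification metrics and the idea of an acceptable error rate concrete, here is a small sketch that computes accuracy, precision, recall, and F1 for one invoice category and compares the overall error rate with a threshold. The labels, the `max_error_rate` value of 5%, and the function name are hypothetical; an actual threshold would be set by management for the specific use case.

```python
def classification_metrics(y_true, y_pred, positive, max_error_rate):
    """Accuracy plus precision, recall, and F1 for one category,
    with a check of the overall error rate against a threshold."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "within_tolerance": (1 - accuracy) <= max_error_rate,
    }


# Invented labels: human-verified categories vs. the LLM's predictions.
y_true = ["travel", "utilities", "travel", "office", "travel", "utilities"]
y_pred = ["travel", "utilities", "office", "office", "travel", "travel"]

report = classification_metrics(y_true, y_pred, positive="travel",
                                max_error_rate=0.05)
for name, value in report.items():
    print(name, value)
```

Recording the threshold alongside the measured metrics gives reviewers a direct view of whether performance stayed within the tolerance that management set.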

Along with rigorous testing and ongoing monitoring of the model’s performance, the company’s finance team has put several other important controls in place. They’re keeping detailed records of all their training, testing, and unlabeled data sets, and have also implemented data versioning and access controls to increase data integrity. Their models are fully documented, covering everything from key decisions and how the model works to known limitations, controls, and any updates made along the way. Finally, they’re transparent with stakeholders about exactly where, how, and when AI is used and, just as importantly, where human oversight still plays a role in the process.
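One way such record keeping could look in practice is sketched below: each AI decision is stored with the model version, the data set version, and SHA-256 fingerprints of its input and output, so later changes can be detected. The field names, version strings, and sample invoice are illustrative assumptions, not a description of the team’s actual system.

```python
import hashlib
import json


def fingerprint(obj):
    """SHA-256 hex digest of a JSON-serializable object.
    Keys are sorted so the same content always hashes the same way."""
    payload = json.dumps(obj, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def audit_record(model_version, dataset_version, model_input,
                 model_output, timestamp):
    """Build one audit trail entry (hypothetical field names)."""
    return {
        "timestamp": timestamp,
        "model_version": model_version,
        "dataset_version": dataset_version,
        "input_sha256": fingerprint(model_input),
        "output_sha256": fingerprint(model_output),
        "output": model_output,
    }


# Invented example entry for a single classified invoice.
record = audit_record(
    model_version="invoice-classifier-1.3",
    dataset_version="test-set-2024-06",
    model_input={"invoice_id": "INV-001", "text": "Electricity, June"},
    model_output={"category": "utilities"},
    timestamp="2024-06-30T12:00:00Z",
)
print(json.dumps(record, indent=2))
```

Storing hashes rather than only raw text is a design choice: it lets a reviewer confirm that an archived input or output has not changed since the record was written, while the raw archives can live under separate access controls.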

What role can Deloitte play?

The importance of effective AI governance, oversight, and controls can’t be overstated. They are the foundation of transparency, accuracy, and reliability for AI finance systems and data. Deloitte can advise you on how to harness AI responsibly using safeguards like Deloitte’s Trustworthy AI™ framework.