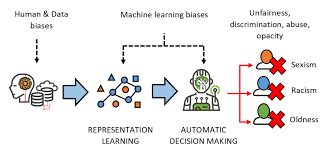

As artificial intelligence (AI) continues to transform industries and daily life, concerns around fairness, accountability, and transparency are becoming more urgent. Among the most pressing issues is algorithmic discrimination—when AI systems produce biased or unfair outcomes that disproportionately impact certain groups. This growing challenge stems from a complex interplay of data quality, design decisions, and systemic inequality, and it calls for equally complex and proactive solutions.

Understanding Algorithmic Bias

At the heart of algorithmic discrimination lies algorithmic bias. This occurs when an AI system systematically favors or disadvantages individuals based on characteristics like race, gender, age, or socioeconomic status. These biases often originate from training data that reflects historical inequalities or lacks adequate representation of diverse populations.

For instance, an AI-powered hiring tool trained on past hiring data may favor male candidates if the historical data contains gender bias. Similarly, some facial recognition systems have shown lower accuracy for individuals with darker skin tones, a gap often attributed in large part to underrepresentation in the training datasets. These cases highlight how AI discrimination can perpetuate and even amplify existing social injustices.

How AI Discrimination Happens

There are several ways algorithmic discrimination can manifest:

Biased Training Data: If historical data includes human biases—such as discriminatory policing or hiring practices—AI systems trained on that data will likely replicate those patterns.

Unrepresentative Datasets: When datasets lack diversity, AI systems may perform poorly for underrepresented groups, leading to unequal outcomes.

Problematic Feature Selection: Including certain variables (like ZIP codes, which may correlate with race or income) can inadvertently introduce bias, even if protected characteristics are explicitly excluded.

Lack of Contextual Understanding: AI systems often lack the nuanced understanding necessary to interpret human behavior fairly, leading to flawed decision-making.
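Two of the failure modes above, unequal performance across groups and proxy features, can be checked with very little code. The following is a minimal sketch, not a complete fairness toolkit: the function names `accuracy_by_group` and `proxy_strength`, the group labels, and the toy data are all hypothetical and chosen only for illustration. It assumes you have a group label available for evaluation purposes, even if that label is excluded from the model itself.

```python
from collections import Counter, defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} so performance gaps between groups are visible."""
    correct = Counter()
    total = Counter()
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def proxy_strength(feature_values, groups):
    """Estimate how well a feature alone reveals group membership.

    For each feature value we guess its most common group. The first number
    returned is the share of records guessed correctly; the second is the
    baseline (share of the largest group overall). A result well above the
    baseline suggests the feature may act as a proxy for the group.
    """
    by_value = defaultdict(Counter)
    for value, group in zip(feature_values, groups):
        by_value[value][group] += 1
    guessed_right = sum(c.most_common(1)[0][1] for c in by_value.values())
    baseline = Counter(groups).most_common(1)[0][1] / len(groups)
    return guessed_right / len(groups), baseline


# Toy, made-up data for illustration only.
zip_codes = ["10001", "10001", "10001", "20002", "20002", "20002"]
groups = ["A", "A", "B", "B", "B", "B"]
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 0, 1, 1, 0]

print(accuracy_by_group(y_true, y_pred, groups))
print(proxy_strength(zip_codes, groups))
```

A large accuracy gap between groups points toward unrepresentative data, while a proxy strength far above the baseline is a reason to look more closely at that feature. Neither number proves discrimination on its own; both are prompts for further investigation.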

The Role of Ethical Data Practices

Preventing algorithmic discrimination starts with ethical data practices. These involve ensuring that data is collected, processed, and used in ways that prioritize fairness, transparency, and inclusivity. Some best practices include:

Auditing Datasets: Regularly evaluate training data for bias and completeness. Where problems are found, reweight, resample, or remove the affected data points, and document what was changed and why.

Diverse Development Teams: Involving individuals from varied backgrounds in the design and testing phases can help identify potential biases and blind spots.

Impact Assessments: Conduct algorithmic impact assessments to evaluate how systems affect different demographic groups.

Transparency and Explainability: Design systems that allow stakeholders to understand how decisions are made, and offer recourse when decisions are disputed.

Continuous Monitoring: Addressing bias isn’t a one-time fix. AI systems should be regularly monitored and updated to ensure fair performance over time.
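As one concrete starting point for impact assessments and ongoing monitoring, you can compare how often each group receives a favorable decision. The sketch below is an assumption-laden illustration, not a standard API: `selection_rates`, `disparate_impact_ratio`, and `flag_disparity` are hypothetical helper names, the data is made up, and the default threshold of 0.8 borrows the "four-fifths" rule of thumb from US employment guidelines, which the original discussion does not prescribe.

```python
from collections import Counter


def selection_rates(decisions, groups):
    """Return {group: share of favorable (truthy) decisions}."""
    selected = Counter()
    total = Counter()
    for decision, group in zip(decisions, groups):
        total[group] += 1
        selected[group] += int(bool(decision))
    return {g: selected[g] / total[g] for g in total}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap
    return min(rates.values()) / highest


def flag_disparity(decisions, groups, threshold=0.8):
    """Summarize rates and flag the system if the ratio falls below threshold."""
    rates = selection_rates(decisions, groups)
    ratio = disparate_impact_ratio(rates)
    return {"rates": rates, "ratio": ratio, "flagged": ratio < threshold}


# Toy, made-up decisions (1 = favorable outcome) for two hypothetical groups.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

report = flag_disparity(decisions, groups)
print(report)
```

Running a check like this on a schedule, against fresh decisions rather than the original test set, is one simple way to turn continuous monitoring into a routine. Selection rate is only one of several fairness measures, and which one fits depends on the application.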

Policy and Regulatory Support

Governments and institutions are beginning to recognize the need for stronger oversight of AI systems. Regulations such as the EU’s AI Act, along with various national-level proposals, aim to enforce accountability for high-risk AI applications. These frameworks typically call for fairness audits, documentation of decision processes, and protections for affected individuals.

Moving Toward Fair AI

Combating algorithmic discrimination is not just a technical challenge—it’s a moral imperative. As AI becomes more embedded in our legal, financial, healthcare, and educational systems, the consequences of bias grow more severe.

The good news is that solutions exist. By auditing training data, testing outcomes across demographic groups, and committing to ethical data practices throughout a system’s life, developers, organizations, and policymakers can build systems that are not only intelligent but also just.

Fairness in AI isn’t automatic—it’s engineered. And it’s up to us to get it right.