The use of Artificial Intelligence (AI) in credit scoring has revolutionized decision-making in the financial sector, offering efficiency and predictive accuracy. However, the opacity of AI models, particularly those based on machine learning, poses significant challenges related to transparency and accountability. Explainable AI (XAI) has emerged as a critical solution to address these concerns by enabling stakeholders to understand, interpret, and trust AI-driven credit scoring systems. This paper explores the role of XAI in credit scoring, emphasizing its impact on fairness, regulatory compliance, and consumer trust. Through a review of existing methodologies and an analysis of their effectiveness, the research demonstrates how XAI can enhance transparency without sacrificing predictive accuracy. It concludes by discussing challenges and opportunities for implementing XAI in financial decision-making systems.

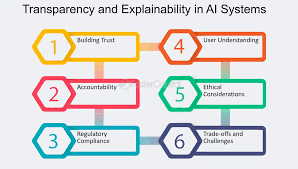

AI-driven credit scoring systems have become integral to the financial industry, offering faster, more accurate assessments of creditworthiness. These systems leverage vast amounts of data and sophisticated machine learning algorithms to predict borrower risk. However, the complexity of these models, often described as “black boxes,” has raised concerns among regulators, financial institutions, and consumers. The lack of transparency in AI-based credit scoring can lead to biased decisions, reduced accountability, and diminished consumer trust. Explainable AI addresses these issues by providing insights into how decisions are made, making AI systems more interpretable and trustworthy. This research examines the role of XAI in improving the transparency and accountability of credit scoring systems, focusing on its potential to balance predictive accuracy with interpretability.

The increasing adoption of AI in credit scoring has highlighted its advantages, including efficiency, scalability, and predictive power. However, studies have consistently pointed to the limitations of traditional machine learning models in terms of explainability. The inability of stakeholders to understand model decisions has led to regulatory scrutiny and eroded consumer trust. Explainable AI has emerged as a solution to these issues, offering tools and methodologies to interpret and visualize decision-making processes. Techniques such as feature importance analysis, SHAP (SHapley Additive exPlanations), and LIME (Local Interpretable Model-agnostic Explanations) have been widely explored in the context of credit scoring. These methods enable stakeholders to understand which variables influence credit decisions and to what extent, providing a foundation for fairness and accountability. However, the literature also notes challenges in implementing XAI, including trade-offs between interpretability and model performance, as well as the need for standardized frameworks.
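To make the idea behind SHAP concrete, the following minimal sketch computes exact Shapley values for a single applicant by enumerating every coalition of features. It is an illustration under stated assumptions, not the model or tooling used in this study: the scoring function `score`, its coefficients, the feature names, and the single reference applicant `baseline` are all hypothetical. Practical SHAP implementations typically approximate these values against a background dataset rather than a single baseline.

```python
from itertools import combinations
from math import factorial


def score(applicant):
    """Hypothetical credit score with one interaction term (illustration only)."""
    s = 300.0
    s += 2.0 * applicant["income_k"]          # income in thousands
    s += 150.0 * applicant["on_time_ratio"]   # share of payments made on time
    s -= 100.0 * applicant["utilization"]     # credit utilization in [0, 1]
    s += 1.0 * applicant["income_k"] * applicant["on_time_ratio"]
    return s


def shapley_values(f, x, baseline):
    """Exact Shapley values of f at x relative to a single baseline point.

    Features outside a coalition are set to their baseline value. The cost
    grows as 2**n in the number of features, so this suits small n only.
    """
    names = list(x)
    n = len(names)
    phi = {}
    for i in names:
        others = [j for j in names if j != i]
        total = 0.0
        for k in range(n):
            # Shapley weight for coalitions of size k that exclude feature i.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                without_i = {
                    j: (x[j] if j in subset else baseline[j]) for j in names
                }
                with_i = dict(without_i)
                with_i[i] = x[i]
                total += weight * (f(with_i) - f(without_i))
        phi[i] = total
    return phi


# Hypothetical applicant and reference profile.
applicant = {"income_k": 60.0, "on_time_ratio": 0.9, "utilization": 0.5}
baseline = {"income_k": 40.0, "on_time_ratio": 0.8, "utilization": 0.3}

phi = shapley_values(score, applicant, baseline)
for name, value in phi.items():
    print(f"{name}: {value:+.2f}")
```

The attributions sum to the difference between the applicant's score and the baseline score, which is the property that lets a stakeholder read each value as that feature's share of the decision.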

This research explores the application of XAI in credit scoring through a mixed-methods approach. A sample dataset of credit applications was analyzed using a machine learning model to predict creditworthiness. Post-hoc explainability techniques, including SHAP and feature importance analysis, were applied to interpret the model’s decisions. The effectiveness of these techniques was evaluated based on their ability to provide clear, actionable insights into the decision-making process. Qualitative feedback was also collected from financial professionals to assess the practical utility of XAI tools in real-world scenarios.

The application of XAI techniques revealed that machine learning models in credit scoring can be effectively interpreted without significant loss of predictive accuracy. SHAP analysis provided detailed insights into feature contributions, enabling stakeholders to identify key factors influencing credit decisions. For instance, income level and payment history emerged as the most critical variables, while less significant factors were appropriately downweighted. Feature importance analysis corroborated these findings, offering a high-level overview of model behavior.
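The global view that feature importance analysis provides can be sketched with permutation importance, one common variant; the study does not specify which variant was used. The sketch measures how much accuracy drops when a single feature column is shuffled. Everything in it is an assumption made for illustration: the rule-based `approve` function stands in for a trained model, the data are synthetic, and the `noise` feature is included only to show that a variable the model ignores receives zero importance.

```python
import random


def approve(row):
    """Hypothetical stand-in for a trained classifier; it ignores 'noise'."""
    z = 0.04 * row["income_k"] + 3.0 * row["on_time_ratio"] - 4.0
    return 1 if z > 0 else 0


def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, n_repeats=5, seed=0):
    """Mean drop in accuracy when one feature column is shuffled."""
    rng = random.Random(seed)
    base = accuracy(model, rows, labels)
    result = {}
    for feature in rows[0]:
        drops = []
        for _ in range(n_repeats):
            column = [r[feature] for r in rows]
            rng.shuffle(column)
            # Copy each row with only the chosen feature replaced.
            shuffled = [dict(r, **{feature: v}) for r, v in zip(rows, column)]
            drops.append(base - accuracy(model, shuffled, labels))
        result[feature] = sum(drops) / n_repeats
    return result


# Synthetic applications; labels come from the rule itself, so the result
# shows which features the model relies on, not how well it fits real data.
data_rng = random.Random(42)
rows = [
    {
        "income_k": data_rng.uniform(20, 120),
        "on_time_ratio": data_rng.uniform(0.5, 1.0),
        "noise": data_rng.random(),
    }
    for _ in range(200)
]
labels = [approve(r) for r in rows]

importance = permutation_importance(approve, rows, labels)
for name, drop in sorted(importance.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {drop:.3f}")
```

Unlike per-applicant Shapley attributions, this score summarizes model behavior across the whole sample, which is why the two views can be used to cross-check each other.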

Qualitative feedback from financial professionals indicated that XAI tools significantly enhance trust and usability. Stakeholders valued the ability to explain adverse decisions to consumers, improving transparency and mitigating disputes. Furthermore, the integration of XAI methods into credit scoring systems was found to support regulatory compliance by demonstrating fairness and accountability. However, challenges remain, including the computational complexity of XAI techniques and the need for industry-wide standards to ensure consistency.
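One way per-feature attributions could support the explanation of adverse decisions is to turn the most negative contributions into plain-language reasons. The sketch below is a hypothetical illustration of that step only: the function `adverse_reasons`, the reason wording in `REASON_TEXT`, and the contribution values are assumptions, not outputs of this study or of any particular library.

```python
# Hypothetical mapping from feature names to consumer-facing wording.
REASON_TEXT = {
    "income_k": "Reported income is low relative to the reference profile",
    "on_time_ratio": "Too few payments were made on time",
    "utilization": "Credit utilization is high relative to the reference profile",
}


def adverse_reasons(contributions, top_n=2):
    """Return wording for the top_n features that lowered the score most."""
    negatives = [(name, value) for name, value in contributions.items() if value < 0]
    negatives.sort(key=lambda item: item[1])  # most negative first
    # Fall back to the raw feature name when no wording is defined.
    return [REASON_TEXT.get(name, name) for name, _ in negatives[:top_n]]


# Hypothetical attributions for a declined applicant (e.g. Shapley values).
contributions = {"income_k": -35.0, "on_time_ratio": 12.0, "utilization": -20.0}

reasons = adverse_reasons(contributions)
for reason in reasons:
    print("-", reason)
```

Features with positive contributions are excluded by design, since they did not count against the applicant.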

Explainable AI represents a transformative approach to addressing the challenges of transparency and accountability in AI-driven credit scoring. By providing clear and interpretable insights into decision-making processes, XAI fosters trust among consumers and regulators while maintaining the predictive accuracy of machine learning models. Although challenges related to computational efficiency and standardization persist, the benefits of XAI in promoting fairness and compliance outweigh its limitations. Future research should focus on developing more efficient XAI techniques and establishing standardized frameworks to facilitate widespread adoption in the financial sector.