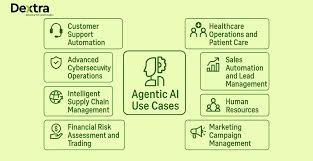

It’s virtually impossible to have a conversation about the future of business without talking about AI. What’s more, the technology is evolving at a furious pace. What started as AI chatbots and image generators is becoming AI “agents”: AI systems that can execute a series of tasks without being given specific instructions. No one knows exactly how all this will play out, but details aside, leaders are considering the very real possibility of wide-scale organizational disruption. It’s an exciting time.

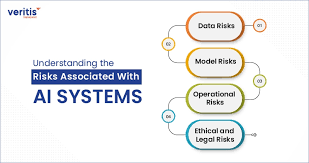

It’s also a nerve-wracking one. Ever-evolving AI brings a new suite of risks, which makes it extraordinarily difficult to meet what I call “The Ethical Nightmare Challenge.” In the face of AI hallucinations, deepfakes, the threat of job loss, IP violations, discriminatory outputs, privacy violations, AI’s black-box nature, and more, the challenge asks leaders to:

- Identify the ethical nightmares for their organizations that may result from wide-scale AI use.

- Create the internal resources that are necessary for nightmare avoidance.

- Upskill employees so they can use those resources, along with their updated professional judgment, to avoid those nightmares.

The challenge is significant, but the reward—wide-scale, safe deployment of transformative technologies—is worth it.

Agentic AI makes rising to this challenge more urgent: It introduces compounding risks that, if not managed, can create business- and brand-defining disasters. As someone who helps companies navigate the ethical risks posed by new technologies, I’ve seen the mistakes companies often make as they try to meet this challenge, and what should be done instead. Often, that requires significant changes throughout a company, not merely a risk assessment tacked onto existing ones.

How Ethical Nightmares Multiply with AI Advances

At most organizations, AI risk management was developed to tackle the potential harms of narrow AI—and this has remained the foundation for how AI risk is handled. To protect their organizations as they adopt new AI tools, leaders need to understand how the risk landscape changes as we move to generative AI, and how narrow and generative AI serve as building blocks for a dizzying array of possibilities that lead to a minefield.

Narrow AI

Narrow AI, also called “traditional” or “predictive” AI, is in the business of making predictions in a narrow domain. Well-known examples include facial recognition software and scoring models (e.g., predicting the risk of defaulting on a mortgage, the likelihood of being a good candidate for a job, and so on). With narrow AI, as I’ve written previously, prominent ethical risks include biased or discriminatory outputs, an inability to explain how the AI arrives at its outputs, and privacy violations.

At most companies, risk programs started by focusing on mitigating the possible harms of narrow AI. There are four important things to understand about how those were constructed so we can see where they fail when it comes to generative AI and beyond:

- The context for how a narrow AI will be used tends to be understood in advance. For instance, if you’re developing a resume-reading AI, chances are it will be used in hiring. (In other cases it can be less clear, e.g., when developing facial recognition software that can be used in a variety of contexts.)

- Data science expertise is needed to perform risk assessments, monitor performance, mitigate risk, and explain how the AI works. Downstream users, on the other hand, mostly submit data to the tool, and in all likelihood they did not create that data themselves (e.g., the HR professional didn’t write the resumes, the insurance professional didn’t fill out the application, and so on).

- There’s often an expert “human in the loop” checking AI outputs before they’re acted upon. For instance, while an AI may predict the likelihood of someone developing diabetes in the next two years, a doctor interprets that output and offers the relevant advice or prescriptions to the patient. The outputs are generated at a pace the human can handle, and that human has the capacity to vet them responsibly.

- Monitoring and intervention can be relatively straightforward. Tools for assessing performance abound, and if the AI is performing poorly on some relevant metric, you can stop using it. The disruption caused by ceasing to use the tool is fairly contained.

Generative AI

With generative AI, however, things change rather drastically:

- The contexts of deployment explode. People use LLMs for all sorts of things. Think of the many ways they may be used in every company by every department by every role for every task. Then add a few thousand more for good measure. This makes it phenomenally difficult to test how the model will perform in its intended context of use.

- Monitoring the AI in the wild becomes immensely important. Because there are so many contexts of deployment, developers of AI cannot possibly foresee all of them, let alone introduce appropriate risk mitigations for them all. Monitoring the AI as it behaves in these unpredictable contexts, before things go off the rails with no one noticing, becomes crucial.

- Keeping a human in the loop is still relatively straightforward, but more training is needed. For instance, LLMs routinely make false statements (a.k.a. “hallucinations”). If users are not appropriately trained to fact-check LLM outputs, then organizations should expect employees to regularly create both internal- and external-facing reports and other documents with false information.

- Gen AI requires extra training to use responsibly. That’s because while narrow AI’s outputs are primarily a function of how data scientists built the AI, generative AI’s outputs are largely a function of the prompts that end users enter; change the prompt and you’ll change the outputs. This means that responsible prompting is needed (e.g., not putting sensitive company data into an LLM that sends the data to a third party).

- Risk assessment and mitigation happen in more places. For the most part, organizations are procuring models from companies like Microsoft, Google, Anthropic, or OpenAI rather than building their own. Developers at those companies engage in some degree of risk mitigation, but those mitigations are necessarily generic. When enterprises have their own data scientists modify the pre-trained model, at either the enterprise or the department level, they also create the need for new risk assessments. This can get complex quickly. There are multiple points at which such assessments can be performed, and it’s not clear whether they’re needed before and after every modification. Determining who should perform which risk assessment at which point in this complex AI lifecycle is difficult in itself, and harder still because the decision must be balanced against other important considerations, such as operational efficiency.

For those organizations that have built AI ethical risk/Responsible AI programs, the risks discussed so far are covered (though how well they’re covered/managed depends on how well the program was built, deployed, and maintained). But as AI evolves, those programs begin to break under the strain of massively increased complexity, as we’ll see.