AI safety institutes have a vital role to play in bringing together technical and ethical expertise from around the world and in developing voluntary, interoperable standards.


As artificial intelligence (AI) advances, countries worldwide are recognising the need for national and international governance to harness its benefits and address its challenges. One of the key questions in AI governance is how to foster innovation and cross-border trade while upholding fundamental values and rights. We argue that the International Network of AI Safety Institutes (INASI) is the right forum to bring together technical and ethical expertise from around the world to develop and adopt voluntary, interoperable standards for AI, standards that are both technical and ethical.

Despite growing attention to the need for effective AI governance, there is little consensus on how to achieve it. Recent global agreements, such as UNESCO’s Recommendation on the Ethics of Artificial Intelligence and the Organisation for Economic Co-operation and Development’s (OECD) AI Principles, acknowledge fundamental values such as human dignity, autonomy, fairness, and transparency. But the broad and ambiguous language of these frameworks leaves room for varying interpretations, reflecting different political and ethical priorities. Key obstacles to establishing global rules for AI governance include competing national interests, divergent regulatory philosophies, the absence of robust accountability mechanisms, the militarisation of AI, technological hegemony, and limited political will.

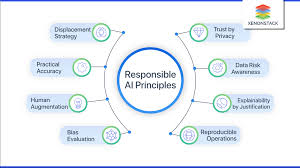

Standards, such as those developed by the International Organization for Standardization (ISO), the International Electrotechnical Commission (IEC), and the Institute of Electrical and Electronics Engineers (IEEE), define requirements for products, services, or processes and thus lay the foundation for technical procurement and product development. They promote the rapid transfer of technologies from research to application and open markets for companies. At the same time, standards ensure interoperability, create transparency and trust in the application of technologies, and support communication between all parties involved by establishing uniform terms and concepts.

Efforts to standardise AI systems are in full swing. International and national standards development organisations are drafting standards that address various aspects of AI, including risk management, data quality, algorithmic bias, explainability, human oversight, cybersecurity, and robustness. In the same vein, the European Union’s AI Act relies on co-regulation through standardisation to ensure that high-risk AI systems, and in the long term also large language models and other foundation models, comply with the regulation.

None of these standards is purely technical. Developing standards for data quality to mitigate bias requires not only technical expertise but also an understanding of the kinds of discrimination we want to avoid. Similarly, crafting standards for explainability and human oversight involves defining the levels of transparency and oversight that are ethically and legally acceptable. As a result, international standardisation efforts profoundly affect not only companies but also citizens and societies, because they shape the ethical and legal boundaries of AI technologies.

INASI was launched in November 2024 to build international consensus on AI safety. The initiative brings together AI safety institutes from Australia, Canada, the European Commission, France, Japan, Kenya, the Republic of Korea, Singapore, the United Kingdom, and the United States. Its purpose is to advance AI safety, identify risks, and propose solutions to mitigate them. It is the leading forum for international cooperation on a common technical understanding of AI safety and on the development of interoperable principles and global best practices. A key missing piece of the puzzle is India, whose inclusion will be important for a truly representative international forum for AI standard setting.

India is uniquely positioned to play a transformative role in shaping global AI governance. As the leading voice of the Global South, India combines the strengths of being one of the largest AI-first start-up ecosystems, the fourth-largest economy, and a global hub for AI research and innovation. Its recent leadership in international fora such as the G20 Summit and the Global Partnership on Artificial Intelligence underscores its ability to bridge the perspectives of developing and developed nations. These platforms have highlighted India’s commitment to inclusive digital transformation and to the development of equitable AI frameworks. The government’s recent announcement that it will establish an AI safety institute will allow India to devote dedicated resources to addressing societal risks, setting global standards, and building domestic capacity in line with global best practices.

India’s participation in initiatives like INASI will be crucial to ensuring that its voice is central to shaping foundational AI governance principles. By promoting the inclusion of experts from the Global South and emphasising the transformative potential of AI in sectors such as healthcare, education, and agriculture, India can champion an equitable approach to AI development. Its endorsement of global agreements will be pivotal to their adoption, given its unique capacity to lead a dialogue that ensures AI technologies benefit all, particularly underrepresented regions.

AI safety institutes will play a critical role in international standard setting. At present, no single global model for this has been proposed. We suggest that any international model must be inclusive: it should bring regions from both the Global North and the Global South into the conversation (much as the G20 does) and allow developing and developed nations to participate on an equal footing. This will be important for ensuring the interoperability of standards and future regulations.