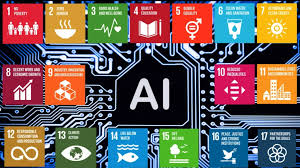

The emergence of artificial intelligence (AI) is shaping an increasing range of sectors. For instance, AI is expected to affect global productivity, equality and inclusion, environmental outcomes, and several other areas, both in the short and long term. Reported potential impacts of AI on sustainable development are both positive and negative. However, to date, there is no published study systematically assessing the extent to which AI might impact all aspects of sustainable development, defined in this study as the 17 Sustainable Development Goals (SDGs) and 169 targets internationally agreed in the 2030 Agenda for Sustainable Development. This is a critical research gap, as we find that AI may influence the ability to meet all SDGs.

Here we present and discuss implications of how AI can either enable or inhibit the delivery of all 17 goals and 169 targets recognized in the 2030 Agenda for Sustainable Development. Relationships were characterized by the methods reported at the end of this study, which can be summarized as a consensus-based expert elicitation process, informed by previous studies aimed at mapping SDG interlinkages. Supplementary Data 1 provides a complete list of all the SDGs and targets, together with a summary and the detailed results from this work. Although there is no internationally agreed definition of AI, for this study we considered as AI any software technology with at least one of the following capabilities: perception, including audio, visual, textual, and tactile (e.g., face recognition); decision-making (e.g., medical diagnosis systems); prediction (e.g., weather forecast); automatic knowledge extraction and pattern recognition from data (e.g., discovery of fake news circles in social media); interactive communication (e.g., social robots or chat bots); and logical reasoning (e.g., theory development from premises). This view encompasses a large variety of subfields, including machine learning.

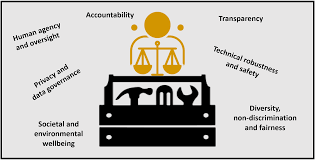

The more we enable SDGs by deploying AI applications, from autonomous vehicles [41] to AI-powered healthcare solutions and smart electrical grids [13], the more important it becomes to invest in the AI safety research needed to keep these systems robust and beneficial, so as to prevent them from malfunctioning or from getting hacked. A crucial research avenue for a safe integration of AI is understanding catastrophes, which can be enabled by a systemic fault in AI technology. For instance, a recent World Economic Forum (WEF) report raises such a concern due to the integration of AI in the financial sector. It is therefore very important to raise awareness of the risks associated with possible failures of AI systems in a society progressively more dependent on this technology. Furthermore, although we were able to find numerous studies suggesting that AI can potentially serve as an enabler for many SDG targets and indicators, a significant fraction of these studies have been conducted in controlled laboratory environments, based on limited datasets or using prototypes. Hence, extrapolating this information to evaluate the real-world effects often remains a challenge. This is particularly true when measuring the impact of AI across broader scales, both temporally and spatially. We acknowledge that conducting controlled experimental trials for evaluating real-world impacts of AI can result in depicting a snapshot situation, where AI tools are tailored towards that specific environment. However, as society is constantly changing (also due to factors including non-AI-based technological advances), the requirements set for AI are changing as well, resulting in a feedback loop with interactions between society and AI. Another underemphasized aspect in existing literature is the resilience of society towards AI-enabled changes.
Therefore, novel methodologies are required to ensure that the impact of new technologies is assessed from the points of view of efficiency, ethics, and sustainability, prior to launching large-scale AI deployments. In this sense, research aimed at obtaining insight into the reasons for failure of AI systems, introducing combined human–machine analysis tools, is an essential step towards accountable AI technology, given the large risk associated with such a failure.

Although we found more published evidence of AI serving as an enabler than as an inhibitor of the SDGs, there are at least two important aspects that should be considered. First, self-interest can be expected to bias the AI research community and industry towards publishing positive results. Second, discovering detrimental aspects of AI may require longer-term studies and, as mentioned above, there are not many established evaluation methodologies available to do so. Bias towards publishing positive results is particularly apparent in the SDGs corresponding to the Environment group. A good example of this bias is target 14.5 on conserving coastal and marine areas, where machine-learning algorithms can provide optimum solutions given a wide range of parameters regarding the best choice of areas to include in conservation networks. However, even if the solutions are optimal from a mathematical point of view (given a certain range of selected parameters), additional research would be needed to assess the long-term impact of such algorithms on equity and fairness, precisely because of the unknown factors that may come into play. Regarding the second point stated above, it is likely that the AI projects with the highest potential for maximizing profit will get funded. Without control, research on AI is expected to be directed towards the AI applications where funding and commercial interests lie. This may result in increased inequality. Consequently, there is the risk that AI-based technologies with potential to achieve certain SDGs may not be prioritized if their expected economic impact is not high. Furthermore, it is essential to promote the development of initiatives to assess the societal, ethical, legal, and environmental implications of new AI technologies.

Substantive research and application of AI technologies to SDGs is concerned with the development of better data-mining and machine-learning techniques for the prediction of certain events. This is the case for applications such as forecasting extreme weather conditions or predicting recidivist offender behavior. The expectation is that this research will enable preparation for and response to a wide range of events. However, there is a research gap in real-world applications of such systems, e.g., by governments (as discussed above). Institutions face a number of barriers to the adoption of AI systems as part of their decision-making process, including the need to set up measures for cybersecurity and the need to protect the privacy of citizens and their data. Both aspects have implications for human rights regarding the issues of surveillance, tracking, communication, and data storage, as well as automation of processes without rigorous ethical standards. Targeting these gaps would be essential to ensure the usability and practicality of AI technologies for governments. This would also be a prerequisite for understanding long-term impacts of AI regarding its potential, while regulating its use to reduce the possible bias that can be inherent to AI.

Furthermore, our research suggests that AI applications are currently biased towards SDG issues that are mainly relevant to those nations where most AI researchers live and work. For instance, many systems applying AI technologies to agriculture, e.g., to automate harvesting or optimize its timing, are located within wealthy nations. Our literature search resulted in only a handful of examples where AI technologies are applied to SDG-related issues in nations without strong AI research. Moreover, if AI technologies are designed and developed for technologically advanced environments, they have the potential to exacerbate problems in less wealthy nations (e.g., when it comes to food production). This finding leads to a substantial concern that developments in AI technologies could increase inequalities both between and within countries, in ways that counteract the overall purpose of the SDGs. We encourage researchers and funders to focus more on designing and developing AI solutions that respond to localized problems in less wealthy nations and regions. Projects undertaking such work should ensure that solutions are not simply transferred from technology-intensive nations. Instead, they should be developed based on a deep understanding of the respective region or culture to increase the likelihood of adoption and success.